So if we keep talking about Computer Science, I suppose we should get around to defining it. This is tricky because it’s a new field. Much like the English major of the nineteenth century, you could argue that Computer Science is the sum total of the knowledge of computing, and we have a major because now there’s too much of it to assume that an “educated person” would know everything you need to know about computing because they have an advanced degree.

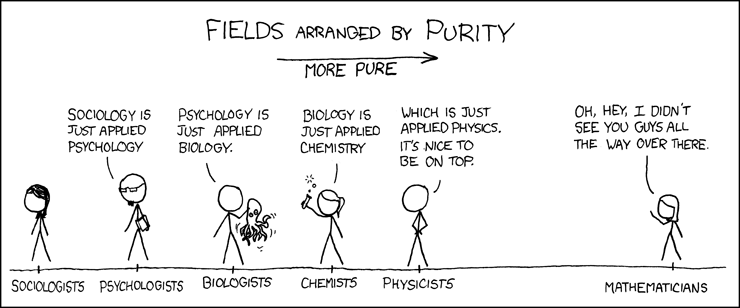

To make matters worse, the field spreads itself over the mighty STEM trinity of Math, Science, and Engineering. For most scientific fields, the practice is actually split on these lines. If you study partial differential equations in all their frustrating abstract glory, then you are a mathematician. If you apply them to a model of a physical system that you are trying to observe or predict, then you’re probably a physicist. If you’re using that empirically verified mathematical model to launch a rocket into orbit, then you’re an engineer.

Today Computer Science departments are covering all possible topics in computing including the theoretical math underpinning computation, the empirical science of computing, software engineering, and hardware engineering. There is an old saw that if you need to put “science” in the name of your fields, then it isn’t science. That’s clever, but we plan to dispel that particular view of Computer Science. Our opinion is that Computer Science as practiced today is a science - and a whole lot more.

Let’s begin with the math. One of the astonishing properties of theoreticalcomputer science (often referred to by the initialism TCS by the computerati) is that, well, there is such a thing as a mathematical basis for computation. When David Hilbert proposed the now famous Hilbert Program, it wasn’t universally acknowledged that there was such a thing. We now have formal theories of what it means to compute something and what it means for a computation to be complex (or difficult). If you’ve heard names like Gödel, Turing, Shor, Goldwasser, Shannon, or Cook (and this is a small sampling) then you know how famous the field has become. Heck, there’s even been a critically acclaimed, award winning, box office hit movie made about Alan Turing (who helped found the entire field of TCS 1 in general and Computability in particular).

We now have formal theories of what is means to compute something and what it means for a computation to be complex (or difficult).

Theoretical computer science is deeply related to other fields of mathematics. In fact, one of the seven millennium Prize Problems is also the big question in TCS. (That’s the “P vs. NP Problem”, and it needs it’s own blog post.) Graph theory, discrete math, combinatorics, polynomials, and a glut of other mathematical disciplines are so intertwined with TCS that the computer scientists doing the most theoretical work are indistinguishable from mathematicians (in a good way).

Like physics, an undergraduate education in computer science requires a bit of math. Your average university major in CS will require Calculus, Discrete Math, Linear Algebra, Probability, and Statistics. That’s on top of the application of those mathematical disciplines in the CS-specific classes. After all that, students are generally also required to a theoretical computer science that focuses on Computability and Complexity.

That’s all great, but what does it mean?

It means that the theoretical foundations of computer science are pretty cool. Computer science, when stripped down to it’s theoretical core, is the study of information. That might not sound exciting at first, but try and give a thorough, rigorous definition of information. Go ahead, I’ll wait…

Pretty tough isn’t it? You have an intuitive idea of what that word means, but try defining it with math and without using words like “data” or “knowledge”. And while we’re at it, what is computation anyway? Without going in to details, TCS starts with the idea that we want to concern ourselves with computation. We want a question that we can encode as a finite series of zeros and ones, we should be able to find an answer to the question in a finite number of steps, and we should be able to report that answer encoded like the question (in a finite series of zeros and ones). This is what the seminal works of Alan Turing and Alonzo Church got us (there were other researchers involved, but that’s a story for another day).

Let’s connect some of that to terms a modern audience might recognize. For our purposes, let’s call the question “input”, the process for finding an answer a “program”, and the answer itself “output”. Starting to sound pretty familiar, right?

How complicated can it all get? More than you might imagine after we described the whole setup in a couple sentences. For instance, it turns out that you can think of problems that you can’t answer given our paltry constraints. In fact, one of the most famous is Turing’s halting problem: given some input and a program, will the program actually stop and give us some output? Remember that we’re talking about being able to handle any possible input/program combination, and that means that some programs won’t ever stop processing. Turing provided a proof that there is no general purpose way to provide an answer for any given input/program pair.

Some programs won’t ever stop. But what about all the processes that will stop? Well, it turns out that if we rephrase our question we can do something about those. The trick is to ask “can you please list every problem solving process and question pair that will stop?”. Why, yes I can!

Turing provided a proof that there is no general purpose way to provide an answer for any given input/program pair.

Let’s think about that for a second. We have a mathematically sound proof that says you can’t give a “yes or no” answer to some questions (“does this thing halt?”). We also know that you can alter some of these yes/no questions and list all the possible “yes” instances.

We can organize these programs into teams. We’re going to call these teams “sets” (that’s what the math people do). So we have three kinds of sets:

-

Sets for which we can write a program that can take any input and tell us if that input belongs to the set. Let’s call these sets identifiable. 2 This would include sets like odd numbers and words with 7 letters.

-

Sets that aren’t identifiable but that are still describable somehow. These are the sets whose members we can list with a program. We’ll call them list-able 3. This includes the set of programs that halt.

-

Set that are neither identifiable nor list-able. This includes the set of programs that don’t halt.

I don’t know about you, but that blows my mind. We can describe problems and sets that we all understand and agree about BUT that no computer program can identify or list. They’re aren’t even that esoteric by most standards. Ask any programmer if they’d like a way to identify all programs that get hung in an infinite loop. You know what’s even crazier? We found those sets with nothing but math and logic, and they don’t depend on what kind of computing we’re talking about. Nope, not even your super fancy new Mac can handle some sets.

The astute reader might be asking “well, can’t we list the processes that don’t halt as well”? That would be nice, but no. If we could you would be able to list everything until you found a program that matched one list or the other - and that would make the set identifiable! We already have a proof stating that this is impossible, so even listing programs that don’t halt is out of the question.

We can describe problems and sets that we all understand and agree about BUT that no computer program can identify or list.

These are mathematical truths we’re dealing with - I mean, we DO call it Theoretical Computer Science. What about the science part? Let’s begin with the so-called “two legs of science” – theory and experimentation. Let’s also assume that Computer Science has plenty of theory. (On top of all the mathematical basis we’ve been talking about, there are all kinds of other theoretical parts of computer science like networking, programming languages, and on and on.) But what about experimentation?

There is certainly a lot of empirical work in Computer Science these days, but the real question is “how much is scientific experimentation and how much is engineering?” The fact that the computer science researchers tend to describe themselves as either “theory people” or “systems people” doesn’t help since “systems” could go either way. How about we start with if empirical experimentation would be a good thing for computer science?

Imagine Bob, the theoretical CS researcher. Bob has been working on a proof about a special kind of networking. Let’s not get in details, so we’ll say that his proof says that for certain kinds of networks there is a certain kind of algorithm that guarantees a certain amount of performance. Bob is super excited because his new performance bound is now the best for this kind of network. But there’s something missing there - did you notice? We didn’t mention an actual algorithm - his proof says that a certain kind of algorithm is out there somewhere. This kind of existence proof happens in TCS often.

Enter Alice, the algorithms researcher. She sees Bob’s paper and actually comes up with an algorithm of the kind Bob describes. At that point she has actually advanced the theoretical state of the art, but she doesn’t stop there 4. Since there’s now a specific algorithm, Alice decides to implement that algorithm by writing a program in a specific computer programming language. And now we have an experiment. Alice can run her program, test it’s performance in a variety of settings, and compare her results to exhaustively studied algorithms 5.

This is the kind of empirical work that happens all the time in computer science. It turns out that humans are bad at knowing what will happen in complicated systems. Really, really bad. That’s why experimentation is so important in all the sciences. When it comes to a new computer system, we often need to build them to know how (and if) they’ll actually function.

Computer science can also offer new forms of experimentation. Namely, computer simulations. There’s a cadre of computer scientists that feel computational modeling is something separate from theory and experimentation. Let’s sidestep that issue for right now. What’s important is that there are some scientific theories that you will never be able to reproduce and almost certainly will never observe. Like, say, everything in cosmology. No one wants cosmologists to create two black holes and smash them together, and no one knows how to launch cosmologists a million light years away to observe something like that. It turns out that they can create and “observe” computer models of two black holes running in to each other, so no one has to get dropped into a black hole.

Is that even helpful? Well, yes. First it allows them to verify expected outcomes. If the outcome from the computer model doesn’t seem right, and they’ve double-checked the computer model, then it’s possible they’ve discovered a new insight. If the model works out as expected then they’ve got a little closer to confirming their hypothesis. This might not sound important, but computer modeling and simulation is now a core practice in most (if not all) scientific communities. In fact, one of the first uses for computers was something called Monte Carlo simulations used by the United States to help design atomic weapons.

If that’s the science part, what’s left for the engineers? We’ve leave a great deal of that for another post, but the engineering side of Computer Science is generally what everyone interacts with every day. Even if we dump all hardware into electrical and mechanical engineering (which a lot of computer engineers would disagree with), you still have all the software around you. If something in your life plugs in to a wall, uses batteries, or has a solar panel, then it almost certainly has some software. When you google, IM, email, kik, slack, text, snapchat, instagram, or tweet you’re using software. And software engineers 6 built it all.

Which brings us to the end of our quick summary of computer science. It’s a pretty big topic, and we’ve merely scratched the surface. We’ll get a chance to explore some more in coming posts.

References

Links used in this article (or that you might want to check out):

- Church: https://en.wikipedia.org/wiki/Alonzo_Church

- Computer Science: https://en.wikipedia.org/wiki/Computer_science

- Computing and the Manhattan Project: http://www.atomicheritage.org/history/computing-and-manhattan-project

- Cook: https://en.wikipedia.org/wiki/Stephen_Cook

- Godel: https://en.wikipedia.org/wiki/Kurt_G%C3%B6del

- Goldwasser: https://en.wikipedia.org/wiki/Shafi_Goldwasser

- Hilbert’s Program: https://en.wikipedia.org/wiki/Hilbert’s_program

- millennium Prize: https://en.wikipedia.org/wiki/Millennium_Prize_Problems

- Monte Carlo: https://en.wikipedia.org/wiki/Monte_Carlo_method

- Science Two Legs: http://cacm.acm.org/magazines/2010/9/98038-science-has-only-two-legs/fulltext

- Shannon: https://en.wikipedia.org/wiki/Claude_Shannon

- Shor: https://en.wikipedia.org/wiki/Peter_Shor

- Theoretical Computer Science: https://en.wikipedia.org/wiki/Theoretical_computer_science

- Turing: https://en.wikipedia.org/wiki/Alan_Turing

- Turing movie: http://www.imdb.com/title/tt2084970/

- See what I did there? ↩︎

- In TCS, we actually call these recursive, computable, or decidable. ↩︎

- In TCS, we call these recursively enumerable, computably enumerable, semi-decidable, provable, or Turing-recognizable ↩︎

- although she might pause there and publish a paper ↩︎

- Yes, here is where practicing computer people will start explaining about benchmarks and how they’re hard or impossible. It’s my hypothetical example - lay off ↩︎

- Yes, some would dispute the use of the term engineer, but that’s a topic for another day ↩︎

- "Purity" image from XKCD under a Creative Commons Attribution-NonCommercial 2.5 License. ↩︎